Extracting SAP Ariba Analytical Reporting API Data Using SAP Integration Suite and Importing It into SAP Datasphere

We’ve recently been looking for a way to use SAP Ariba data in SAP Datasphere. The first idea would be “Oh, let’s create an Ariba connection in Datasphere”, right? Hold on a second! Nothing is simple once Ariba is involved. There is simply no Ariba connection available in Datasphere. Period! See Preparing Connectivity for Connections.

Okay, what can we do? You will need to use the SAP Ariba APIs, that’s it! Hmm, great, let me dive into the Ariba world and find out how I can use the Ariba API.

Solution

Don’t panic: Mackenzie Moylan has published two blog posts that show how to use SAP Integration Suite with the SAP Ariba API:

- Extracting SAP Ariba Reporting API Data using SAP Integration Suite

- Extracting SAP Ariba Reporting API Data using SAP Integration Suite Part 2 — Sending SAP Ariba Data into SAC

There is also a GitHub repository, SAP-samples / btp-spend-analysis, and an SAP mission that is essentially a copy of it, Extract your Ariba Spend Data using SAP Integration Suite, which I have completed.

You may have a long discussion about why we would extract data from SAP Ariba and import it into SAP Datasphere. Here is the key:

Current Position — What is the challenge?

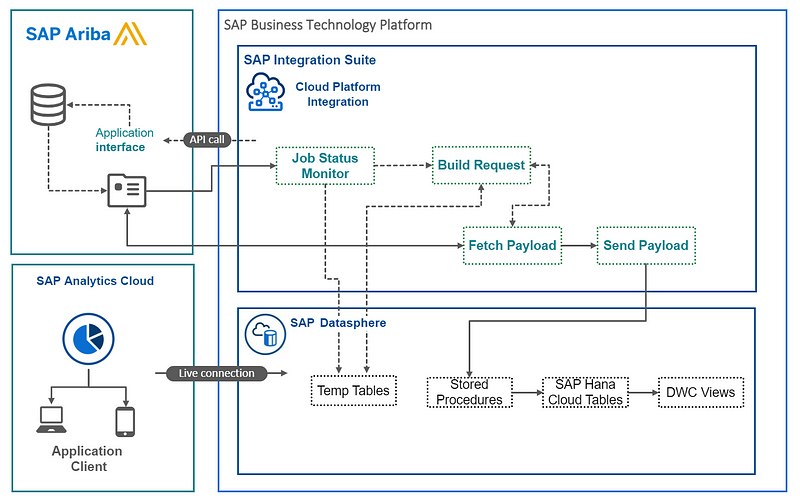

To activate the SAP Ariba Spend Analysis, advanced reporting and analytics add-on for SAP Datasphere and SAP Analytics Cloud, you need to move your Ariba data into Datasphere. Ariba provides an Analytical API for customers to access the data generated by the Spend Analysis entitlement. Due to the format of the API response, there is no direct ingestion mechanism for Datasphere, therefore something needs to be introduced to orchestrate that data movement.

Hence there is no way to avoid using the SAP Ariba API. Let’s dive into all the blog posts, help documents, GitHub sites, and missions.

Eventually I was able to complete the mission successfully.

Crucial Steps

Imported SAP Datasphere business content SAP Ariba Spend Analysis — pretty standard procedure

Created a Database User in SAP Datasphere’s SAP_CONTENT space — check Integrating Data and Managing Spaces in SAP Datasphere > Create a Database User

Created the required tables in the newly created HANA Open SQL Schema with HANA Explorer. The scripts are available here: SAP Datasphere Create Table Scripts

Created a Security Material (that’s what it’s called) in SAP Integration Suite for the Ariba application of the Analytical Reporting API. Basically, you will need to create an OAuth2 credentials entry.

Created a JDBC Material, aka a connection, for the SAP Datasphere HANA Open SQL Schema.

Downloaded, imported, and deployed the integration flows, all of which are available here: btp-spend-analysis/src/Flows/. The key here is that you need to download the files one by one because, as Oliveira Miguel Domingos de pointed out, “Unfortunately, how the iFlows were shared on GitHub is not compatible with the Integration Suite import option (via .zip files).” So if you try to import them into SAP Integration Suite as they are, the import will fail. Just manually add the .zip extension to each file (I know it’s very cumbersome), and then you can import them all successfully into the Integration Suite.

Deployed all the integration flows and figured out how to run them. The guidance only says briefly: “Go to the Configure option of each integration flow with a name containing ”1” and change the configuration parameters like dates, APIKEY, and so forth”. That turned out to be a big deal, because not only the API key but also other things, such as the API realm and the connection names, have to be changed in each and every integration flow. Otherwise they will fail! Okay, all done eventually, and I’m able to run all of them. There are two main integration flows that have to be run: (1) SAP_1_Ariba_Dimension_Tables (2) SAP_2_Ariba_Fact_Tables

Monitored the runs until all of them completed successfully, of course after some back-and-forth iterations because of errors, adjusting the date filters (the Ariba API does not like wide date ranges), etc.

Checked the loaded data in the SAP Datasphere Open SQL Schema tables and in the Data Builder local tables.
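Since the date filters were the part that needed the most iterations, here is a minimal sketch of how a wide date range could be cut into smaller windows before they are entered as filter values. The function name `split_date_range` and the 30-day window size are my own assumptions for illustration; the real maximum range comes from the Ariba API limitations, so check those before picking a value.

```python
from datetime import date, timedelta
from typing import Iterator, Tuple


def split_date_range(start: date, end: date, max_days: int) -> Iterator[Tuple[date, date]]:
    """Yield consecutive (window_start, window_end) pairs covering start..end.

    Both ends of every window are inclusive, and each window spans at most
    max_days calendar days. The name and signature are hypothetical.
    """
    if max_days < 1:
        raise ValueError("max_days must be at least 1")
    if end < start:
        raise ValueError("end must not be before start")
    window_start = start
    while window_start <= end:
        # Clamp the last window so it never runs past the requested end date.
        window_end = min(window_start + timedelta(days=max_days - 1), end)
        yield window_start, window_end
        window_start = window_end + timedelta(days=1)


if __name__ == "__main__":
    # 30 days is a placeholder, not a documented Ariba limit.
    for window_start, window_end in split_date_range(date(2023, 1, 1), date(2023, 3, 31), 30):
        print(window_start.isoformat(), window_end.isoformat())
```

Each pair can then be used as the from/to date of one integration flow run, so a failed run only has to be repeated for its own window.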

Observations

I’ve observed that if I run the integration flows again, the data in the tables is duplicated. Evidently the database operation is just an Insert, but it should be an Upsert (Update / Insert) based on the primary key values in the tables.
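To make the difference concrete, here is a small sketch that loads the same rows twice, once with a plain Insert and once with an Upsert. It uses Python's built-in SQLite as a stand-in, not SAP HANA and not the actual integration flows; the table `supplier_dim`, its columns, and the sample rows are made up for illustration. SQLite's `INSERT OR REPLACE` plays the role that an `UPSERT ... WITH PRIMARY KEY` statement would play on the HANA side.

```python
import sqlite3

# Made-up sample rows; the real tables come from the create-table scripts.
ROWS = [("S001", "Acme"), ("S002", "Globex")]


def create_table(conn: sqlite3.Connection, with_primary_key: bool) -> None:
    """Create the hypothetical supplier_dim table, optionally with a primary key."""
    key_clause = " PRIMARY KEY" if with_primary_key else ""
    conn.execute(
        f"CREATE TABLE supplier_dim (supplier_id TEXT{key_clause}, supplier_name TEXT)"
    )


def load(conn: sqlite3.Connection, rows, upsert: bool) -> None:
    """Write rows with a plain insert, or with SQLite's insert-or-replace."""
    verb = "INSERT OR REPLACE" if upsert else "INSERT"
    conn.executemany(
        f"{verb} INTO supplier_dim (supplier_id, supplier_name) VALUES (?, ?)", rows
    )
    conn.commit()


def row_count_after_two_runs(with_primary_key: bool, upsert: bool) -> int:
    """Simulate running the same load twice and return the final row count."""
    conn = sqlite3.connect(":memory:")
    try:
        create_table(conn, with_primary_key)
        load(conn, ROWS, upsert)
        load(conn, ROWS, upsert)  # second run, same data
        return conn.execute("SELECT COUNT(*) FROM supplier_dim").fetchone()[0]
    finally:
        conn.close()


if __name__ == "__main__":
    print("plain insert, no key:", row_count_after_two_runs(False, False))
    print("upsert on primary key:", row_count_after_two_runs(True, True))
```

With the plain insert the second run doubles the rows, while the keyed upsert leaves the count unchanged, which is the behaviour you would want from a repeatable load.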

Secondly, there seems to be a lot of room to improve the external parameters of the integration flows.

It’s better to keep your Ariba and HANA Open SQL connections under the same names as suggested in the guidance for your POC (proof of concept), to avoid too many changes. Later, though, I recommend applying your own standards and naming conventions to the connections.

I’d recommend learning how to use Postman to test the Ariba APIs.

There are limitations on the Ariba Analytical Reporting API; read them carefully.

There are two methods to extract data with the Ariba Analytical Reporting API: (1) Asynchronous (2) Synchronous. Don’t jump into the Synchronous method, since it has more limitations. It has been discussed here: “Those limitations and advice are correct from an SAP Ariba Perspective. You will need to use the Asynchronous Analytical Reporting API to fetch larger sets of data. It will be more complex than Synchronous, but that is the advice I would give anyone too.”
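Part of the extra complexity of the Asynchronous method is that you submit a job and then have to wait for it before fetching the result. A rough sketch of that waiting step could look like the following. Everything here is an assumption for illustration: `wait_for_job` is a hypothetical helper, `get_status` stands in for whatever call returns the job status, and the state names `completed` and `failed` are placeholders, not the values the Ariba API actually returns.

```python
import time
from typing import Callable, FrozenSet


def wait_for_job(
    get_status: Callable[[], str],
    done_states: FrozenSet[str] = frozenset({"completed"}),  # placeholder state name
    failed_states: FrozenSet[str] = frozenset({"failed"}),   # placeholder state name
    poll_seconds: float = 30.0,
    max_polls: int = 120,
    sleep: Callable[[float], None] = time.sleep,
) -> str:
    """Poll get_status until the job reaches a done or failed state.

    Returns the final status on success, raises RuntimeError on a failed
    state, and raises TimeoutError after max_polls unsuccessful checks.
    """
    for attempt in range(max_polls):
        status = get_status()
        if status in done_states:
            return status
        if status in failed_states:
            raise RuntimeError(f"job ended with status {status!r}")
        if attempt < max_polls - 1:
            sleep(poll_seconds)  # wait before asking again
    raise TimeoutError(f"job not finished after {max_polls} status checks")


if __name__ == "__main__":
    # Simulated status sequence instead of a real API call.
    statuses = iter(["pending", "processing", "completed"])
    print(wait_for_job(lambda: next(statuses), sleep=lambda seconds: None))
```

The status check is passed in as a function so the same loop works whether the status comes from Postman-style manual tests, a script, or an integration flow step.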

Conclusion

It’s great that we can use SAP Integration Suite to extract data from SAP Ariba with the Ariba Analytical Reporting API and import it into SAP Datasphere. The key is learning the details of the Ariba APIs and designing the integration flows in a generic way in the Integration Suite, so that you can manage and monitor them easily and accommodate more data load needs from Ariba.